The idea in a nutshell: voice recognition is a year or two away from being able to understand millions of words, spoken by an individual in noisy surroundings, better than a human can. At that point, the number of searches we do by voice, and our expectations of the companies we interact with, will change dramatically. The ramifications for the way we interact with companies are enormous.

Computers will soon ‘understand’ almost everything.

As usual, Mary Meeker’s 2016 slides keep yielding a great deal of value. This is one of them, a slide whose significance I missed the first time I ran through the deck:

Luckily, as this article shows, others caught it and have offered some extremely intelligent analysis of it.

In short, computers will soon be able to understand almost everything said to them, even if the person speaking is in a car, in a crowded room or watching a blaring TV in their own front room.

As the article points out:

“Baidu’s chief scientist Andrew Ng says ‘as speech recognition accuracy goes from 95 percent to 99 percent, all of us in the room will go from barely using it to using it all the time.’ Ng also estimates that 50 percent of all web searches will be voice-powered by 2019.”
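Ng’s point is easier to see if you look at the error rate rather than the accuracy. Treating accuracy $a$ as the share of words transcribed correctly (a simplification; word error rate as measured in practice also counts inserted and deleted words), the jump he describes cuts errors fivefold:

$$
e = 1 - a, \qquad e_{95\%} = 1 - 0.95 = 0.05, \qquad e_{99\%} = 1 - 0.99 = 0.01, \qquad \frac{e_{95\%}}{e_{99\%}} = \frac{0.05}{0.01} = 5
$$

In other words, a system that gets 5 words in every 100 wrong drops to getting 1 in every 100 wrong, which is roughly the difference between constantly correcting it and barely noticing it.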

The article also highlights some interesting use cases in which using voice to interact with a company leads to a far-from-optimal experience. It’s well worth a read.

Voice as part of a change in interfaces to the web

The ‘arrival’ of standout voice recognition technology comes at the same time as virtual assistants and bots, both of which I’ve written about extensively on this blog.

What interests me most about the intersection of these things is the very different experience people will have with the internet over the next few years.

Where we are now – features of interacting with the internet

Now: humans are the ‘agents’

- People prioritise their own tasks all day, from the minute they get up until the minute they go to sleep at night.

- Little proactivity: We receive very few proactive notifications each day. An alarm in the morning, perhaps, and some push notifications on your phone. In the morning you might get an email from a website you signed up to a while ago, scan it and delete it. Your telco could send you an SMS to say that, 24 hours ago, you exceeded your data bundle.

- User-initiated tasks: At the moment, save for a few Coke machines, all tasks undertaken on the internet are user-initiated.

- Individuals pull information: If you want something, you go to Google, search for it and then pick something from the list of results. To interact with a company, you go to a destination.

- Interactions are almost all text-based: Interactions with companies on the internet are largely text-based. At the moment, I am told, 20% of internet searches are voice-based. The other 80%, the Pareto majority, are typed. If I go to one of these destination websites, I am far more likely to read it and then email the company or chat with it over instant messenger than I am to call a phone number listed on it. And if I do call a phone number (unless it’s a click-to-chat VoIP session), I am stepping off the internet into another channel.

The next phase of the internet

Most of these features are going to change over the next 2 years:

Moving towards ‘The Intelligent Internet’

Agency will change

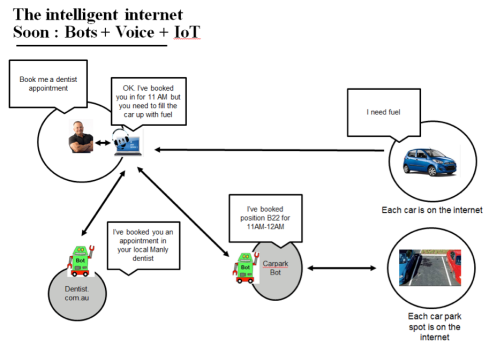

- Critically, agency changes: Agency, in the sense of deciding on and carrying out some of the tasks I used to spend my time on, has shifted. The internet has become ‘intelligent’ in some very basic but still significant ways. The low-level tasks I currently spend a lot of my time on, such as booking meetings with colleagues and the rigmarole of finding a parking spot (see below for a fictitious, future version), are done for me.

- The interface between user and internet has changed: Destinations (apps and websites) will still exist, but interactions with brands and companies will be more voice- and bot-based. Using voice means I can be authenticated biometrically, as the ATO (Australian Taxation Office) already does. I speak many of my requests and receive spoken answers. I do not visit a .com.au domain to do that, although I am still interacting with the brand and I am still online.

Above: (some) agency has moved to the internet. Individuals have less to remember. The interface has changed: I am now speaking rather than writing my queries.

Summing up – 3 friction points removed

Across the three key components of this imminent change, a lot of friction disappears. I suspect that makes the change more likely to happen.

- Less to remember: Where once I needed to remember a bunch of passwords to access stuff, I can now use my voice to identify myself biometrically. Any biometric authentication gives a probability that the individual has been correctly identified, not a ‘yes, this is her’ guarantee. However, second-factor authentication can help. Imagine logging in to everything with a whispered word…

- Voice is 50% of my interaction: Voice might make up only half of what we do on the internet. As the article I linked to above points out, there are many use cases where voice interaction is impractical. Take, for example, asking your virtual assistant what your bank balance is: you may not want a spoken response to that. But people generally speak faster than they type, and now half of their interactions with your brand do not involve your app or website.

- The basic stuff is all done automatically: The movement of some aspects of agency to the internet frees up some of my time for ‘more economically worthwhile pursuits.’
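The first friction point above, that a voice match gives a likelihood rather than a certainty and that a second factor helps, can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the false-acceptance rates and the `authenticate` helper are hypothetical, and multiplying the rates together assumes the two factors fail independently, which real systems only approximate.

```python
"""Sketch: a voice biometric match combined with a second factor (hypothetical rates)."""

# Hypothetical false-acceptance rates (FAR): the chance an impostor is accepted.
# Illustrative values only, not measurements of any real system.
VOICE_FAR = 0.01           # assumed: voice match accepts an impostor 1% of the time
SECOND_FACTOR_FAR = 0.001  # assumed: an impostor gets past the second factor 0.1% of the time


def combined_false_accept(*rates: float) -> float:
    """Chance an impostor passes every factor, ASSUMING the factors fail independently."""
    result = 1.0
    for rate in rates:
        if not 0.0 <= rate <= 1.0:
            raise ValueError("each rate must be a probability between 0 and 1")
        result *= rate
    return result


def authenticate(voice_score: float, threshold: float, second_factor_ok: bool) -> bool:
    """Hypothetical policy: accept only if the voice match score clears the threshold
    AND the second factor (e.g. a one-time code) also passes."""
    return voice_score >= threshold and second_factor_ok


if __name__ == "__main__":
    print(f"Voice alone:        {VOICE_FAR:.4%}")
    print(f"Voice + 2nd factor: {combined_false_accept(VOICE_FAR, SECOND_FACTOR_FAR):.4%}")
    print(authenticate(0.93, threshold=0.90, second_factor_ok=True))
```

Under these made-up numbers, the combined rate is 0.001% against 1% for voice alone. How much a second factor really helps depends on how independent the two checks are in practice.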